Waiting in dOSEK

Date: 2015-02-24

In my current research, I'm working on the dOSEK operating system. dOSEK is dependable operating system that implements the OSEK standard for embedded real-time systems. dOSEK is designed to be resilient against soft-errors (transient hardware faults, bit flipts) in memory and the registers (A paper on RTAS'15 will be published soon). In this blog post, I will describe how we implemented a branchless version of OSEK events, and integrated it in our system. First, I will give a short overview about the scheduling primitive used in dOSEK.

Scheduling in dOSEK

The core of dOSEK is the priority-driven scheduler. OSEK is a static operating-system standard; for a specific system, we know exactly how many threads exists. This number will never change, it is configured at compile time. The scheduler selects always the thread with the highest priority that is runnable and executes it. In dOSEK, a thread is runnable, if its priority is larger than the priority of the idle thread. In pseudocode, the scheduler/dispatcher looks like this:

schedule() {

current_thread = idle_id;

current_prio = idle_prio;

updateMax((current_thread, current_prio),

(thread_1_id, thread_1_prio));

updateMax((current_thread, current_prio),

(thread_2_id, thread_2_prio));

updateMax((current_thread, current_prio),

(thread_3_id, thread_3_prio));

switch_to_thread(current_thread);

}

The scheduler is generated for the specific system (in this case, for a

system with 3 threads), and contains a updateMax() cascade.

updateMax() is a hardened operation, that updates the first input

tuple with the second one, iff the priority of the second argument-tuple

(second tuple, second item) is higher than the priority of the first

tuple. In the first cascade element, current_thread is set to

thread_1_id, if current_prio \< thread_1_prio. In pseudocode:

updateMax((a, b), (c, d)) {

if (b < d) {

(a, b) = (c, d);

}

}

Events in OSEK

In OSEK, events are the only possibility for a thread to wait on

something. Each thread can receive a number of event signals. With the

system call WaitEvent(), a thread can wait for one or more events to

happen. If any of the events from the list got signaled by another

thread with SetEvent, the waiting thread unblocks. Signals are not

automatically cleared, but must be cleared explicitly by ClearEvent.

A version with branches can be implemented by two bitmasks:

struct Thread {

...

event_mask_t events_waiting;

event_mask_t events_set;

...

};

SetEvent(Thread t, event_mask_t m) {

t.event_set |= m;

}

WaitEvent(Thread t, event_mask_t m) {

t.event_waiting = m;

}

ClearEvent(Thread t, event_mask_t m) {

// Remove the event mask bitwise

t.event_waiting &= ~m;

t.event_set &= ~m;

}

Schedule() {

...

if (thread_1.event_waiting != 0

|| (thread_1.event_waiting & thread_1.event_set) != 0) {

updateMax((current_thread, current_prio),

(thread_1_id, thread_1_prio));

}

In this simple variant, we maintain a event_waiting mask, which

maintains a bitmask of events a specific thread is waiting for. The

event_set bitmask holds a bitmask of signaled events. If a thread is

waiting, and none of the waited signals is set, we exclude the thread

from the updateMax() cascade. It is get blocked.

But there is one problem here, with dependability: we have branches. Branches are evil; makeing them resilent against soft-errors is hard. Therefore, we want to have a branchless version of it.

Events in dOSEK

Shortly explained, in the branchless version, we let the priority of a

thread drop below the idle~priority~, if it currently blocks. Therefore,

we calculate a blocking term for every thread that is either zero or the

highest priority in the system. This blocking term is substracted from

the thread priority, when calling updateMax():

updateMax((current_thread, current_prio),

(thread_1_id, thread_1_prio - blocking_term));

For each event, a thread can receive, we have two integer variables W

(for waiting) and S (for set). Both variables can have two values:

either 0 or High (for highest priority in the system).

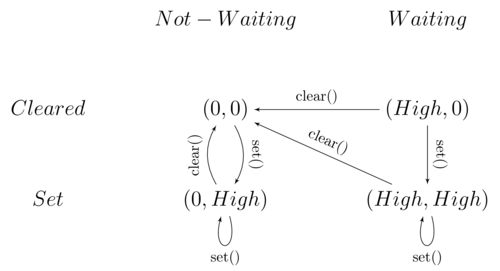

In this diagram, we see all four states a event can have. A event is a

tuple of (W, S). The set() and clear() operations set override the

tuple. If we want to wait for an event mask, we set the W flag

accordingly for all events a thread can wait for:

struct Event {

int W;

int S;

};

Event thread_1_event_a;

Event thread_1_event_b;

...

WaitEvent(Thread t, event_mask_t m) {

// t is always known at compile time, and this cascade is generated for the system.

if (t == thread_1) {

if (m & 1)

thread_1_event_a.W = High;

else

thread_1_event_a.W = 0;

if (m & 2)

thread_1_event_b.W = High;

else

thread_1_event_b.W = 0;

}

}

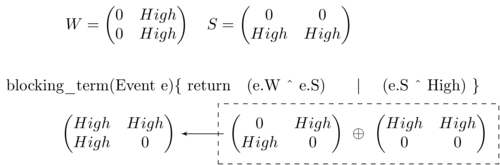

But how can we now deduce the blocking_term from the event states?

First we calculate the blocking_term for a single event. We use a

matrix notation that captures all four states from the diagram shown

before.

By the blocking term function, we generate a term for each event that is

only 0, if the event was used for blocking and is set. In all other

cases, the blocking term is High. We achieved this by using only

bitwise XOR an OR operation. We're still branchless! :-)

We combine now all blocking terms of all events a specific task can wait

for with AND. So the result is only then zero if at least single event,

which is on the waiting list, is set. Furthermore, we determine whether

we can block in the first place, by combining all W states with OR.

The should_wait variable is either High, if we're waiting, or 0 if

we're not waiting.

does_block = blocking_term(thread_1_event_a) & blocking_term(thread_1_event_b); should_wait = thread_1_event_a.W | thread_1_event_b.W; blocking_term = should_wait & blocking_term;

Combining both variables with AND, we achieve our blocking term.

Branchless. And we can subtract it, before we call the updateMax(),

from the threads priority.